-

Overview

As a professional artist for over 30 years, I share the same concerns about AI as other creative professionals. But I also understand that AI is here to stay, so I have been dedicating time to learning about and experimenting with its capabilities in an ethical manner. My intent here is NOT to condone or condemn, but to study.

My focus has been incorporating AI into my traditional workflow: using AI tools and my own trained models to build upon my initial sketches and paintings, exploring variations and refining my work while maintaining control over the final result and avoiding influence from other artists' styles or other outside sources.

-

Goal

I want to use AI to speed up my visual development workflow. To render for me, so to speak. I sketch out my ideas and process them with AI image-to-image toolsets and AI models trained on my work. I approach the process as if my sketches were a scene I built in Maya and AI were the renderer, going from idea to fully realized image.

Just as important is testing newer AI models and toolsets that may be able to filter out unwanted stylization and influences.

-

Tools

AI Base Model - Stable Diffusion

Platforms - Leonardo.ai and Getimg.ai

Pipeline - Stable Diffusion XL, DDIM sampler, and image to image. Any prompts used are a direct description of the source sketch image or simply the letter “A”.

My custom models were built on both of the above platforms, each trained on 40 to 100 of my own sketches.

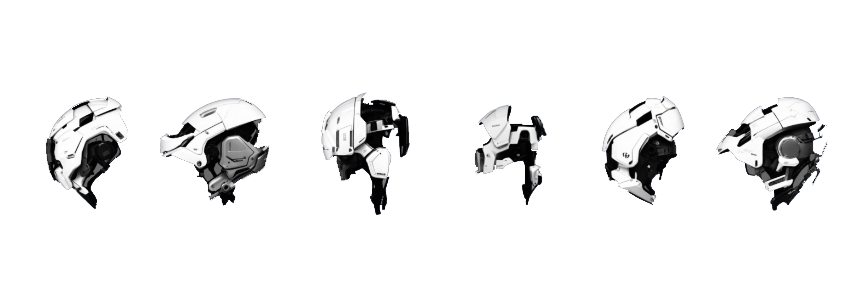

AI Generated Variants on a Theme

Simple prompts are used to shift thumbnails towards a specific theme. The prompt can be as simple as “post-apocalyptic”, with most of the weight kept on the sketch.

Thumbnails - Propagation of a single sheet of b/w sketches into multiple variants as well as varied themes influenced by prompts.

The goal here was simple: create subtle variations of some thumbnail sketches. I upload a sheet of simple thumbnails, drawn in Procreate, into a Stable Diffusion image-to-image workflow. Where a prompt is mandatory, it is simply the letter “A”. The image influence strength is set high, so the image carries more weight than the prompt.
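To make the "image influence" setting more concrete, here is a minimal sketch of the arithmetic, under stated assumptions. Open-source Stable Diffusion image-to-image pipelines (Hugging Face diffusers, for example) expose a `strength` value between 0 and 1 that controls how much of the sampler's schedule is applied to the source image. The sketch assumes the platform slider is roughly the inverse of that value. That mapping, the function names, and the 30-step count are illustrative assumptions, not documented behavior of Leonardo.ai or Getimg.ai.

```python
def denoising_strength(image_influence: float) -> float:
    """Hypothetical mapping: more image influence means less denoising.

    Assumes the platform slider runs from 0 (ignore the sketch)
    to 1 (keep the sketch almost unchanged).
    """
    if not 0.0 <= image_influence <= 1.0:
        raise ValueError("image_influence must be between 0 and 1")
    return 1.0 - image_influence


def steps_actually_run(num_inference_steps: int, strength: float) -> int:
    """Number of sampler steps applied to the noised sketch.

    Follows the rule used by open-source img2img pipelines: only the
    final `strength` fraction of the schedule is run on the image.
    """
    return min(int(num_inference_steps * strength), num_inference_steps)


if __name__ == "__main__":
    # Compare a high, medium, and low image influence over 30 steps.
    for influence in (0.75, 0.5, 0.25):
        strength = denoising_strength(influence)
        steps = steps_actually_run(30, strength)
        print(f"influence={influence} strength={strength} steps={steps}")
```

Under these assumptions, a high influence value means only a small part of the schedule reworks the sketch, which fits the subtle variations described above.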

AI Generated Variants of Source Sketch

Source Sketch

Post-Apocalyptic city

Alien Planet with ships and buildings

Jungle with waterfalls and colorful plants

Ice Planet

Rough Sketch Refined - Taking a speed painting concept to a fully realized image with AI

The idea here was to use AI to take a quick digital painting that captures an initial idea and basically “render” a final image of it. To maintain the intent, composition, and feel of the initial digital sketch. And to explore variations and additional details within the concept that further the initial idea without losing its intent.

Source Sketch and AI Generated Variant

20 minute sketch/30 minutes - Sampling of 40 raw images generated using image to image

Source Sketch

Final Image-bash/Paint-over

2-3 hours - Raw AI output vetting, bashing, and final paintover

AI Generated Variants of Source Sketch

Final Paint-over

Custom AI Model Test - AI model creation by inputting abstract sketches I made with one of my fav brushes in Infinite Painter

Source Sketches for AI Model

B/W sketches and variations - Create around 40 to input into the custom AI model creation tool at Getimg.ai. Processing can take up to a few days to generate the model.

Example 2

Initial image followed by application of my AI Model

Source Sketch

I then use the image-to-image workflow to apply my custom AI model. In this example, my AI model behaves like an ultra-powerful PS filter when applied to a simple sketch. (I created the sketch with the same brush I used for the AI model. Just a coincidence.)

Output From AI Model

A couple of images showing the model's influence on the original sketch, from minor to major. Influence is adjusted by a slider and can cover a very large range.